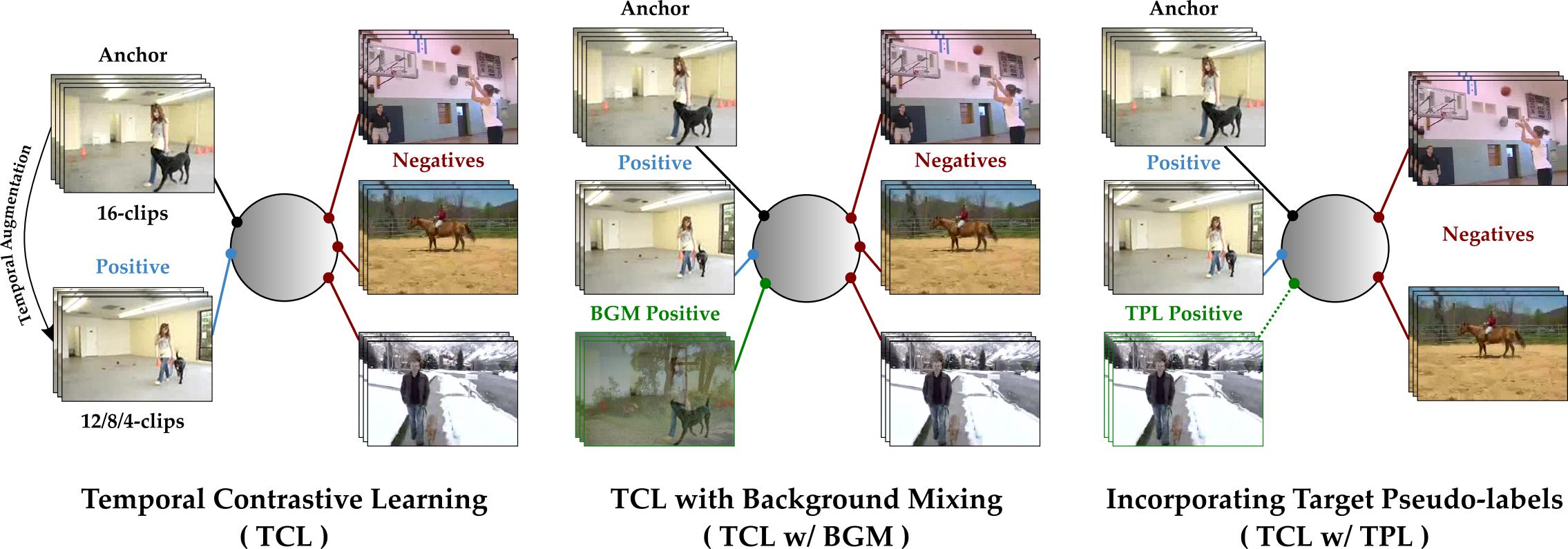

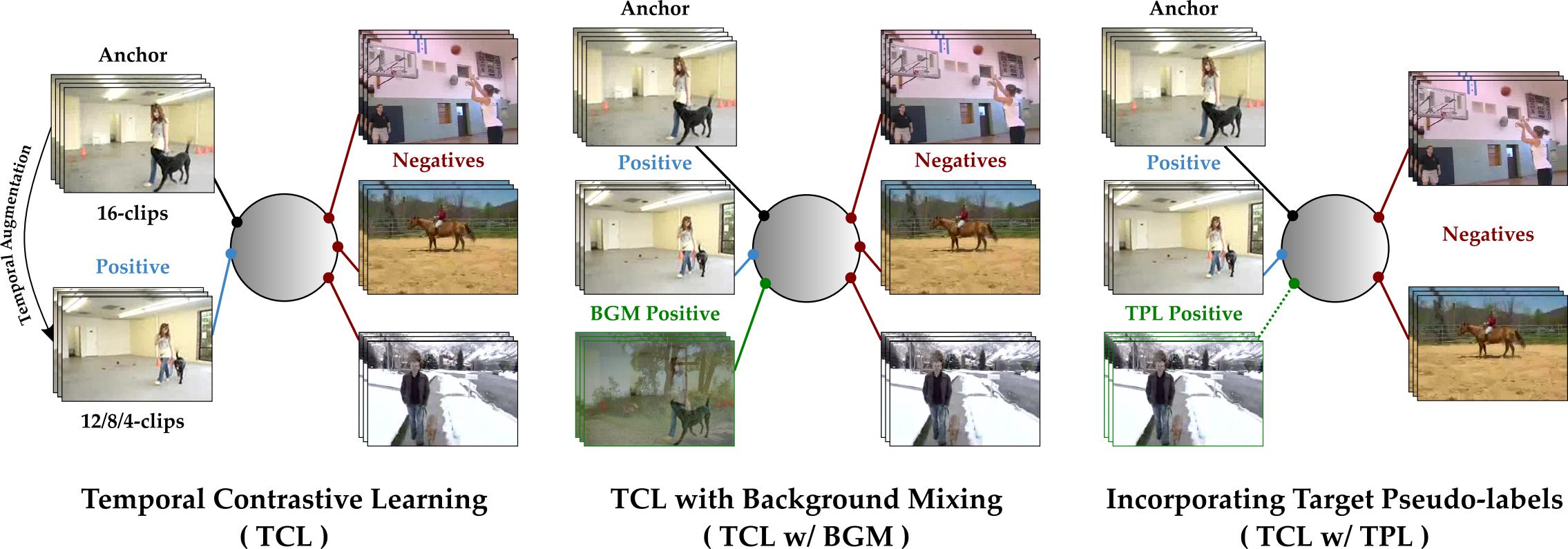

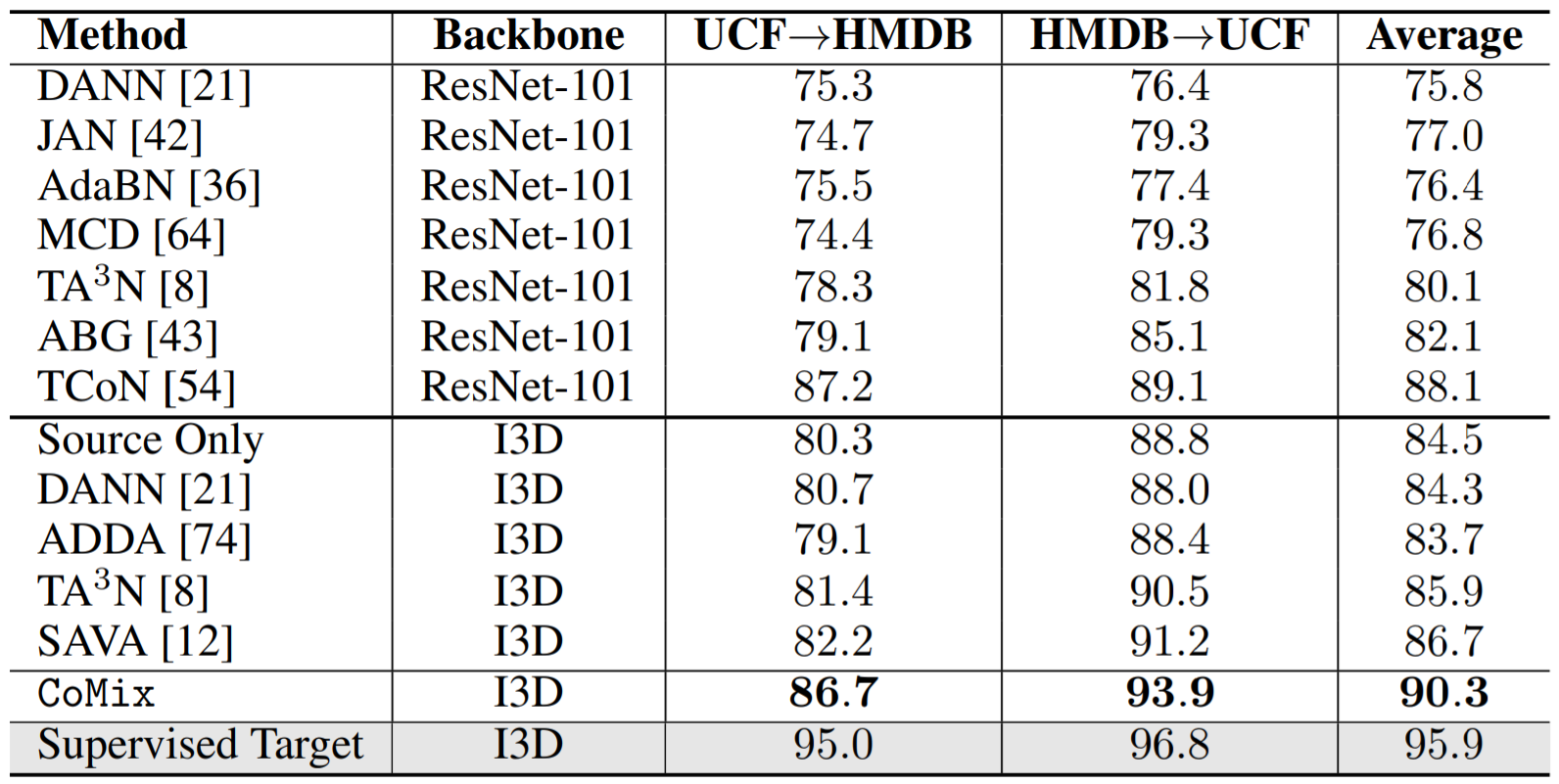

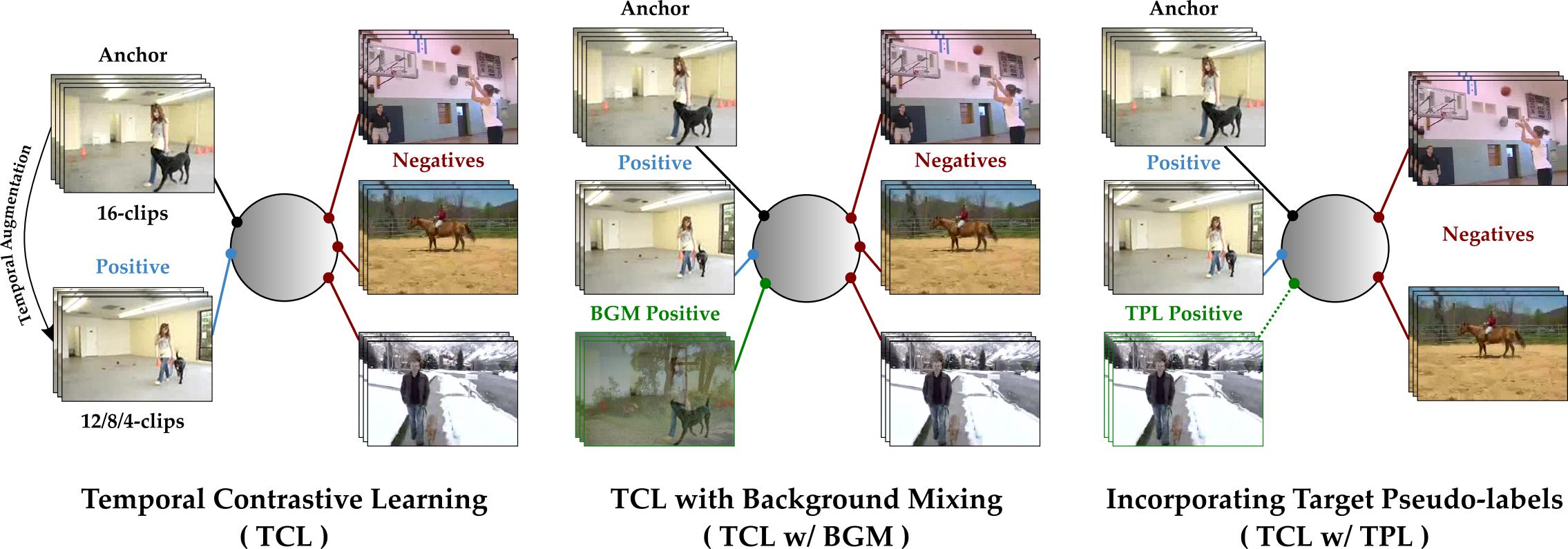

Aadarsh Sahoo, Rutav Shah, Rameswar Panda, Kate Saenko, Abir Das. "Contrast and Mix: Temporal Contrastive Video Domain Adaptation with Background Mixing." Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS), 2021. [PDF] [Supp] [Poster] [Slides] [Code]